A picture is worth a #ThousandWords

One Small Step Collective

Social media data analysis has come a long way since it first appeared on marketers' radars in the early years of social media. Back when "likes" were kudos on MySpace and advertisers were restricted to crude display ads, only a few companies recognised the true value of online discussion. Those companies started building analysis tools that could dig deeper than simple volumetrics.

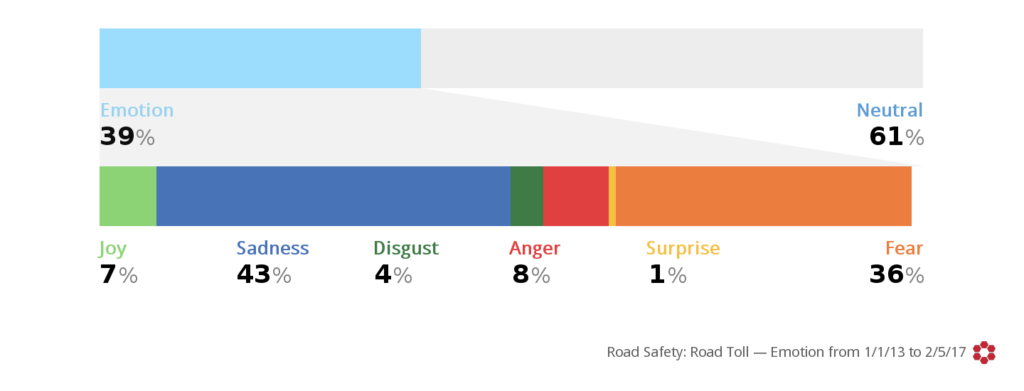

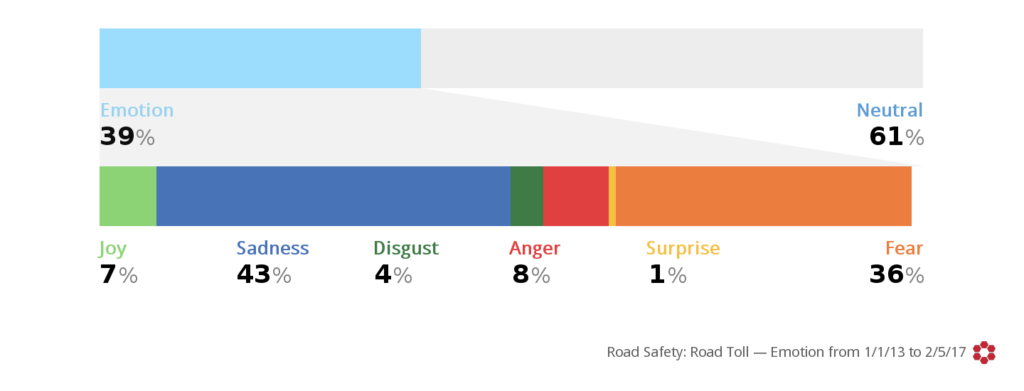

Today, there is a growing and obvious need for better data. Marketers need analysis that measures more meaningful details, such as sentiment and nuanced topic trends, across both broad public data and social media discussion.

This need has led to the development of advanced pattern and sentiment analysis for unstructured data, such as Crimson Hexagon's BrightView algorithm, which we use. BrightView can make sense of a huge, unstructured database of social posts. Using machine learning, the algorithm looks at patterns of language and groups discussions by topic or sentiment, making the data incredibly useful from a brand or marketing point of view.

And that is just the start: the technology to discern sentiment across the social landscape continues to evolve. We recently had Marco Grill, Senior Business Development Manager at Crimson Hexagon, visit our agency here in Melbourne to take us through the pipeline of new Crimson Hexagon developments.

It may come as little surprise that the future of social analysis lies in photo and video analysis. There are now 1.8 billion photos shared on social media daily. As for video, YouTube alone holds 819,417,600 hours of footage, or 95,394 years.

The rise of camera-centric social media such as Snapchat (and copycat features now seen in every other social platform) has made it easier than ever for people to share, communicate and update without writing a word.

This presents an issue for traditional social data analytics, which relies on keywords and unstructured text to discern public sentiment towards a topic.

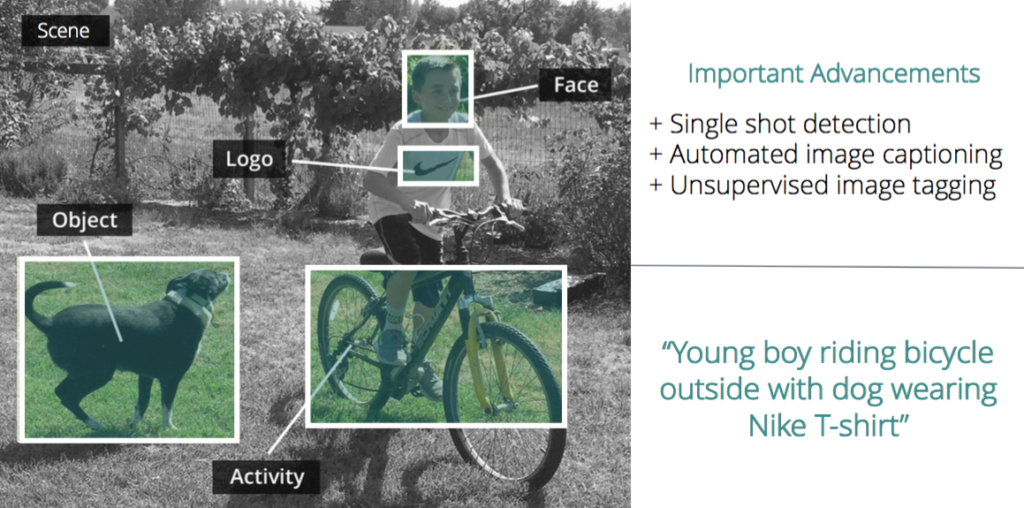

Marco showed us a 5-second clip of some people eating in a restaurant, with some activity in the background. He then ran the footage again with Crimson's in-development video analysis tool overlaid. This time it labelled each person in the clip, the items they were holding and what was in the background, and even tracked the changing emotional state of their faces.

What does this mean? This new technology will be able to show how a brand is being represented visually on social media.

Content-awareness isn't exactly new. Apple and Google both analyse your photos as you upload them so you can search them more easily later. Try loading a Facebook page and stopping the browser before the images load. The broken images should show content-aware alt text describing what each image most likely contains. This feature lets visually impaired people use Facebook more easily.

What is new and exciting is applying this kind of technology to social media analysis, particularly for video. Brands can track how often their products appear in media, and what the general vibe is among the people pictured. The potential for deeper insights here is largely untapped, and many brands and advertisers will be excited to try these new developments out.

It isn't quite there yet. This next step in social analytics is still being refined, but it's not far away either. Seeing it in action was enough for us here at OSSC to know it will be a bit of a game changer.